Re-Release: Defending Your Reality: Cybersecurity & Gen AI — Ben Colman

Every click, image, and video online now demands a second look. How can innovation safeguard authenticity in this new era?

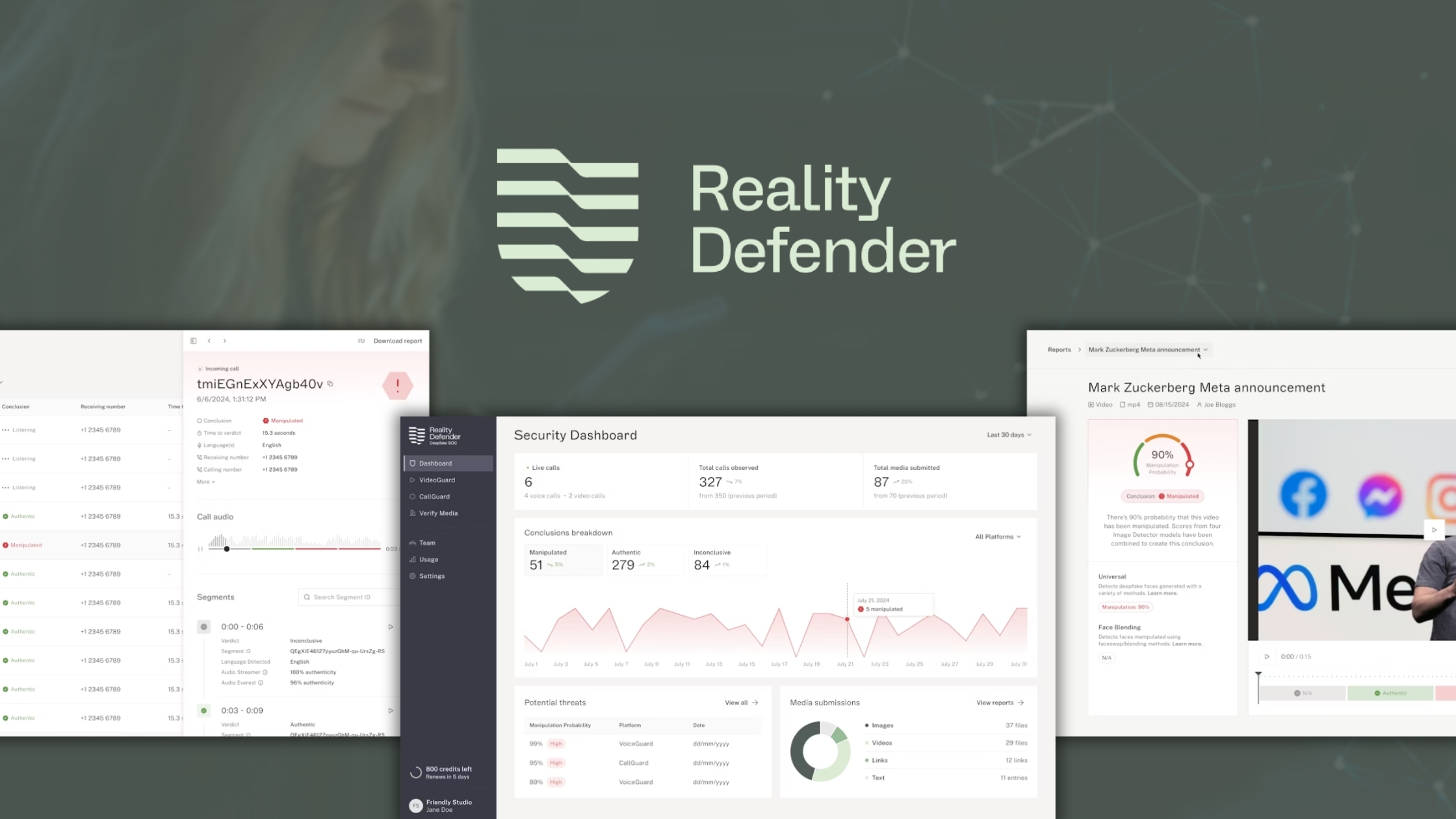

In celebration of Cybersecurity Awareness Month, we’re revisiting a June 2024 episode of the VentureFuel Visionaries podcast featuring Ben Colman, founder of Reality Defender, the leading deepfake detection platform that helps enterprises flag fraudulent users and uncover manipulated content.

In this episode, we dive deep into the world of AI, cybersecurity, and the innovative solutions being developed to protect our reality in an increasingly digital age. Whether you missed this episode the first time or are hearing it again with fresh ears, this conversation is more relevant than ever in today's digital landscape!


Episode Highlights

- Rise of Deepfake Threats Across All Media Formats – Ben explains how generative AI has rapidly expanded beyond voice cloning to include audio, video, image, and text manipulation. This creates new cybersecurity risks across every digital channel.

- How Deepfake Detection Is Protecting Real-World Industries – The discussion explores how organizations — from banks to media companies — use advanced AI tools to verify authenticity, flag manipulated content, and prevent fraud in real time.

- Building Trust and Privacy in a Synthetic Media Era – He shares how deepfake detection models work without storing personal identifiers like face or voice prints, ensuring strong data privacy while accurately assessing what’s real and what’s not.

- Urgent Need for Regulation and Platform Accountability – Ben urges stronger leadership and faster regulatory action to ensure platforms take real responsibility for moderating AI-generated content.

- Growing Threat of Real-Time Voice and Video Fraud – Looking ahead, he warns of a surge in voice-based scams and deepfake-enabled impersonations, highlighting the importance of proactive, real-time detection tools to protect individuals and organizations.


October is Cybersecurity Awareness Month, and to mark the occasion, we're revisiting one of our most timely and impactful conversations. In this re-release of VentureFuel Visionaries, we sit down with Ben Colman, founder of Reality Defender, the leading deepfake detection platform that helps enterprises flag fraudulent users and uncover manipulated content.

As cyber threats evolve at lightning speed, especially with the rise of AI-generated audio, video, images, and text, Reality Defender is at the forefront of protecting businesses, individuals, and society from deception. Whether you missed this episode the first time or are hearing it again with fresh ears, this conversation is more relevant than ever in today's digital landscape. Welcome to today's episode of the VentureFuel Visionaries podcast.

Vanessa Ivette Rosado

I'm Vanessa Rosado, and I am pleased to be joined by Ben Colman, co-founder and CEO of Reality Defender. Ben, welcome to the show.

Ben Colman

Thanks, Vanessa. I'm super excited to be a part of the show.

Vanessa Ivette Rosado

So I wanted to start, you know, you guys have been in the press a lot lately. This interview is part of a series we're doing featuring the startups from the generative AI cohort at Comcast NBCUniversal LIFT Labs Accelerator earlier this year. But for those who have managed to miss it, which is difficult: who is Reality Defender? Who are you guys? What do you do? Tell us a little bit about your work.

Ben Colman

Yeah, thank you for this opportunity to humanize Reality Defender. Well, as our name suggests, we defend reality. We're a research-led team that started off as a nonprofit applied research team that developed early novel approaches to identifying deepfaked or AI-generated or manipulated media, and started putting out a number of white papers.

And then the world caught up to us, and suddenly generative AI is more than a buzzword. And as fast as it's moving on the generative side, it's moving equally fast for every type of hack or breach or identity fraud or deepfaked revenge porn. It really only takes imagination to think about how to take these interesting or educational or business-related generative models and use them for much more nefarious, more dangerous purposes.

So, to start off, there were three of us, Gaurav, Ali, and myself, the three co-founders. Now we've grown to about 27 people across full-time, part-time, and research partners, based in the heart of Manhattan, downtown, a stone's throw from the World Trade Center. We're proud to be a New York startup. We admire our friends and partners in San Francisco, but we firmly believe that New York City is seeing a renaissance in tech, and it's great to be surrounded by so many tech-partnered industries, such as finance, advertising, and media.

Vanessa Ivette Rosado

And why did you found Reality Defender? You talked a little bit about it, and we're also based in New York. We're remote and fully distributed, but our headquarters is actually in Brooklyn. And as a marketer myself, by trade and by training, I know New York is a center for media, journalism, advertising, and finance, which are some of the sectors you just mentioned that are disproportionately affected by this challenge of deepfakes, although it's relevant for everyone.

But can you talk a little bit about why you started Reality Defender? Why focus on this problem specifically in terms of deepfake? And what your unique value proposition is in the marketplace as it relates to deepfake?

Ben Colman

Yeah, on a very personal level, I've been working at the intersection of cybersecurity and data science for over a decade. I've worked at Google, I've worked at Goldman Sachs, I've done some research for different global government groups. And a few years ago, I was working for a large global investment bank that was faced with a number of different voice-based fraud attacks.

And what most defenses try to do is focus on voice matching, understanding what's called a voice print of Vanessa's voice or Ben's voice. And this was just at the beginning of different types of text-to-voice techniques. I think we all remember our first computer: we'd type in a word and it would say it in a very computer-sounding voice, kind of like a robot. And that was starting to pick up.

At the time, I think it needed a few hours of Vanessa's voice and a few thousand dollars of cloud compute to make a voice match, to replicate your voice with text-to-speech. And the writing was on the wall that it was gonna become a much bigger issue. What I didn't realize was that it was gonna be an issue across all modalities: audio, video, image, and text as well.

So when we first started the company, we were only focusing on one of these types of media. And as we continued to build the team and develop our own research, and also met researchers finishing their PhDs and master's degrees, we realized there was a lot of overlap on the research side between video and audio, between images and video, between audio and text. And so what we've organically developed is an expertise in building state-of-the-art models to detect fakeness, AI generation, or manipulation in any kind of media you can find online.

And the reason for that is I have two small kids, and the world they're gonna face in the next few decades, let alone the next few years, is a world where it doesn't take a few hours of voice but, as of the last few months, only a few seconds of voice to make a perfect fake voice that sounds just like yours or mine. And so while I think we had a hunch early on that this was gonna be a big issue, we did not realize how quickly the world was gonna accelerate.

We thought it would take years, and it's been months. Now, I think with the latest Microsoft model, you need less than 10 seconds of someone's voice to make a perfect voice match. That voice won't know how to talk like Vanessa or like Ben, with whatever slang we use, but outside of specific verbiage, it'll sound just like Vanessa or just like Ben.

So part of our team, the majority of it, is focused on developing research in this space. Another part of our team is focused on taking that research and commercializing it in a way that is simple to use for a non-technical audience. Those audience members range from government groups looking at misinformation online. For example, pick your hot-button global event or election. I'll let the audience pick one, but I'm sure they could find one online that already has a lot of fake media.

Oftentimes, you don't know who the media is coming from or where. It's been shared and re-shared. Our platform is able to identify, in real time, whether the media has been manipulated and how. It also helps you interpret and visualize where the anomalies are, explaining to a non-technical audience how and why the face, the voice, or the biomechanics (the breathing, the eye blinking, the head movement) may indicate a fake.

And what's really interesting about how we do what we do is that we don't have any ground truth, meaning we will never be able to compare the media to a real version of it. This also means that our clients, whether they're governments, banks, or media companies like Comcast and NBC, can rest assured that we're not recording or retaining Vanessa's voice, Vanessa's face, or Vanessa's fingerprint.

For companies that do use an underlying voice print, face print, fingerprint, or any other type of personally identifiable information, if they get hacked, it's not like you, Vanessa, can reset your face or reset your voice. What our approach means is that everything we're doing is probabilistic. Our highest confidence level is 99%, because we never actually have a perfect, pure version to compare against. We always assume the media is completely anonymized, and we don't have any way to accept information such as a name, a place, or anything else.

For example, one of our tools checks Vanessa's voice. We work with banks, scanning real-time phone calls to see if perhaps somebody is impersonating Vanessa to authorize a wire transfer. We work with large media organizations within Comcast, and with other networks as well, that are scanning for editorial fact-checking. They get media from a war zone or an election or something topical in sports, and they scan it all.

We're also working with different groups across the tech ecosystem, looking at things like social media posts that may come from a state-level actor or a hacking group trying to impersonate someone, destroy someone's reputation, or affect public opinion about a person, a company, or an elected official. Wrapping that all together, our platform looks like a simple website where you can drag and drop a file, and there's also a more formal programmatic use case.

A company, a bank, or a government group could just stream millions of files or real-time phone calls to us electronically, and we'll stream back risk scores, which they can use to decide whether or not to ask Vanessa for more information: "Hi, Vanessa, I would like to confirm the year you were born and which school you went to for college." You get these questions from a bank when they don't know whether you're calling from a new device, or, if it's so obviously suspicious, the call gets escalated to a fraud team immediately.

And I think where we see the world moving is a place where every piece of media, whether it's an email, whether it's online, whether it's on the phone, whether it's in a Zoom call, will be scanned to identify how much or how little AI was used or generated. I'm not saying that AI is bad at all. Right now, this podcast is our real voice, but it does use a lot of bandwidth. If we're on Zoom, it's a lot of bandwidth as well.

In the near future, we'll probably use some type of AI avatar to speak as us, and it'll be much less bandwidth needed. But I think it'll still be important to document how and why and where AI is being used to establish the truthfulness of who or what is being discussed. I know that was a bit of a long-winded answer.

Vanessa Ivette Rosado

I mean, you're in AI yourself, Ben, and I know we didn't talk about this in the prep, but you're talking about Reality Defender's role in enabling the technology to scale and do all of the wonderful things that it can do. The name Reality Defender, and a lot of the content around it, talks about deepfakes, but it is much more broadly about this question of cybersecurity. And the world continues to evolve in a direction where everything we do is mediated. I mean, you just said it, right?

Even the messages I send my son sometimes are videos of me when I'm away from home and he's bored. So everything we do is mediated, and as AI becomes more broadly integrated into those mediated contact moments, how do you think about deepfakes more broadly? Because maybe when people hear "deepfake," they think of the one sensational moment when they saw a piece of news that was fake or something like that. But how do you talk to people so that they can think about this more broadly, beyond the cybersecurity issues that are broadly affecting all industries or have the potential to affect every kind of…

Ben Colman

Yeah, I think the challenge of working in cybersecurity is that as much as we believe it's the most important digital space to focus on, to most average people it's scary and confusing. Outside of the walled garden of folks like you and me, who read the blogs and are tracking everything going on with, I guess, OpenAI over the last few days, most people just aren't really exposed to deepfakes and generative AI until their email is hacked or they receive a very personal phone call from someone pretending to be a loved one, saying that they got into a car accident or need money because they went to jail.

Then, lo and behold, they wire money to someone overseas who is, in this case, executing a deepfake voice attack on somebody. A lot of what we're doing is focused on education, but also on much-needed regulations because the onus should not and cannot be on the average consumer to become an expert at identifying synthetic media.

The analogy that we typically use is that, because there are laws in place, you and I don't have to be experts at identifying ransomware, advanced persistent threats, or ordinary computer viruses in our email. Our email just automatically scans for them, because the average person is neither qualified nor an expert in analyzing raw code. The same goes for synthetic media.

Not even the experts on our team, let alone average web users, can tell the difference between real and fake, because the technology has advanced so much. And if experts can't tell the difference between real and not real, our parents, our children, and our grandparents just don't stand a chance. So it's this kind of vicious cycle of fear and confusion.

We look to our elected officials and regulators to really move quickly on this, given that other countries and regions, including the EU, the UK, Taiwan, Singapore, Japan, and India, already have laws on their books, at minimum requiring platforms to indicate whether something is likely AI-generated or manipulated. But here in the US, for a variety of reasons, it is still taking time. So I think we're moving in the right direction, but between now and then, a lot of people are going to have a lot of trouble trusting online content.

Vanessa Ivette Rosado

A few weeks ago, I had the pleasure of speaking with Jun of Monterey AI, also from the cohort, and I did not have a question about the regulatory environment or AI regulation. But I am going to ask you, only because it has come up, and not necessarily about your point of view on what AI regulation should be. You've just described something that intuitively, with common sense, makes sense: if an expert can't detect it, how can an everyday user be expected to navigate it? What advice do you have for elected officials who might be looking at this space and trying to learn about it?

Ben Colman

You know, I'd say we need a strong leadership approach on this, because we've seen time and time again that if you allow the platforms to police themselves, they'll do the bare minimum. If you pick your favorite social media platform or professional networking platform right now, it's a multi-step process to flag something you think is manipulated.

And only then does it get kicked to an outsourced, typically underpaid, under-resourced content moderator who not only is not qualified to score the media, but looks at only horrible things all day and has a very short lifespan in the role. Meanwhile, this could be done completely programmatically upon upload. And it matters not just for things that are dangerous to individuals, but also for things that are dangerous to the markets. Case in point:

A few months ago, there was a photo that appeared to show an explosion at the Pentagon, which we identified within seconds as having been created with a diffusion-based generative model. But across TikTok and Twitter and Facebook and Snapchat, Telegram, et cetera, it was shared and re-shared and led to a hundred-billion-dollar flash crash in the market. Some will say, oh, well, the market came back, but in those seconds or minutes, a lot of people had a lot of challenges.

And the other problem is that once it's shared and re-shared, it leaves online media and goes to text messages. People like my parents saw it a few times and forwarded it to me. It must be real; they got it from four people. So anyhow, to your question about what people should think: people should push the platforms they use and their elected officials not just to do more, but to do something, and not trust the platforms themselves to flag everything, because that only works if everyone's playing by the same rules.

And you wouldn't trust a hacker to follow the right rules and watermark their content because they're just not going to. Or they'll find a way to fake a watermark, get through all the moderation on a platform, and try to challenge public opinion on an election or a person's reputation.

Vanessa Ivette Rosado

Yeah, there are so many interesting cases, particularly coming out of Europe, where years ago, the platforms were given an opportunity to self-moderate, and those tests failed because it's just a very difficult task for all of these things that you just talked about. What advice do you have for organizations that are trying to take preventive action to protect themselves against this fake content now?

So, less about government action; this is for private industry. How do you advise them, or for folks listening, saying, "Hey, this is very interesting. Okay, this would be a great solution for us." Other than emailing you immediately, obviously, what do you say?

Ben Colman

Yeah, I mean, my email's ben@realitydefender.com. We're happy to provide access to our solution. We don't think anything other than software can solve this issue, and we can do it really efficiently and cost-effectively. Our goal is that every piece of media is scanned upon upload or upon share, not after it's gone viral and been shared a billion times. There's no way to put the toothpaste back in the tube, as they say, once it's been re-shared. Even if it's later shown to be fake, it's already too little, too late.

Vanessa Ivette Rosado

You've talked a lot about how you have evolved, your research has evolved over time and the platform has evolved over time. What can we expect? What does the future look like for Reality Defender? What's coming in terms of projects or new services that you'll be offering in the next six to 12 months?

Ben Colman

I think we've been successful in taking a lot of our asynchronous models, where you upload a file of something that's already happened (a recording of an audio clip or a video, or an uploaded image or text), and moving them to a more real-time architecture. We started off with audio, so we're supporting a number of global banks in scanning real-time phone calls, which took a huge amount of effort from our engineering and research teams to get going. And now we're moving into video as well.

So we're looking toward a future where real-time video, for example on Zoom or Google Hangouts, will be deepfaked. Currently, it's a little too expensive for an average hacker to do continuously, but the prices are dramatically shrinking. So we're looking forward, over the next few quarters, to rolling out real-time deepfake video detection to see whether Vanessa's face or Ben's face and voice are real or fake, and why.

Vanessa Ivette Rosado

This makes me wonder if that Zoom video enhancement is gonna go away, but I'll save that question for another day.

Ben Colman

Yeah, but what you bring up is a really interesting question about anyone with an iPhone or Android device, or using a social media platform, or using different beautification filters. I'd love to remove all my wrinkles, or maybe make my chin look more like a superhero's. Is that fraud? Is it not fraud? So there's a lot of work to be done in different areas that are really a gradient of how AI is adjusting what we see and hear.

Vanessa Ivette Rosado

Yeah, now with the holidays upon us, there's a great ad promoting one of the latest smartphones that has an auto-correct photo feature where you can select… You can make all your friends smile at the same time. That's a very oversimplified version of what you're talking about, but it is a good question in terms of policies, right? What do we consider a deepfake versus just media editing? I don't know.

Ben, what trends or developments are you keeping an eye on for 2024? Obviously, there's been a lot of talk about the AI space in general in the last year, particularly in the last couple of weeks, as you look ahead beyond just Reality Defender, but within the industry, what are you keeping an eye on?

Ben Colman

Yeah, I mean, I think an area that's gonna affect everyone in the US and globally over the next 12 months is a dramatic increase in voice-based fraud against their credit card companies, their banks, or their loved ones. In the last few years, there's been real growth in voice biometrics that match Vanessa's voice, Vanessa's voice print. And those techniques are being defeated by deepfakes and by generative AI.

So we're going to see a big push from the provenance side of matching voices to the inference side of detecting that, while it sounds, looks, and feels like Vanessa's voice, it is actually Ben leveraging AI to make it appear that Vanessa is saying whatever it is: "please let me open a bank account," "I forgot my password," "please execute a wire transfer," or "I'm in trouble, I need you to wire money to this account overseas," things like that.

So I would say, over the next 12 months, we're gonna see the explosion of voice-based fraud just because of the ease of accessing tools that are either low cost or actually free online or on social media apps or on mobile apps as well.

Vanessa Ivette Rosado

Ben, before we get you out of here tonight, where can our listeners go to learn more about you, about Reality Defender and about your work?

Ben Colman

We have a whole range of educational documentation materials on our website, www.realitydefender.com. My name is Ben Colman, my email's ben@realitydefender.com and we'd love to be of service, love to help organizations, government groups, nonprofits. If you have an issue around deepfake fraud, we're here to serve.

Vanessa Ivette Rosado

Awesome, Ben, thank you so much for your time today and good luck.

Ben Colman

Thank you.

Thanks for joining us for this special re-release of VentureFuel Visionaries in honor of Cybersecurity Awareness Month. The threats may be evolving, but so are the tools and innovators working to keep us safe. Ben Colman and Reality Defender remind us that vigilance and innovation are our best defenses against deception in the digital age.

If you found this conversation valuable, be sure to subscribe, share it with your network and check out our other episodes featuring trailblazers reshaping the future. Until next time, stay curious, stay innovative, and most importantly, stay secure.

VentureFuel builds and accelerates innovation programs for industry leaders by helping them unlock the power of External Innovation via startup collaborations.